New Vitastor Storage Layer (with WA=1)

How to Enlarge your Iops again

Vitaliy Filippov

Vitastor

- vitastor.io

- Distributed SDS

- Original design goals:

🚀 Low latency (~0.1 ms)

🚀 Low CPU usage

- Block + FS and S3

- «Smart», not Network-RAID

3 years of development

Examples:

0.6-0.7 — A lot of crash/hang fixes

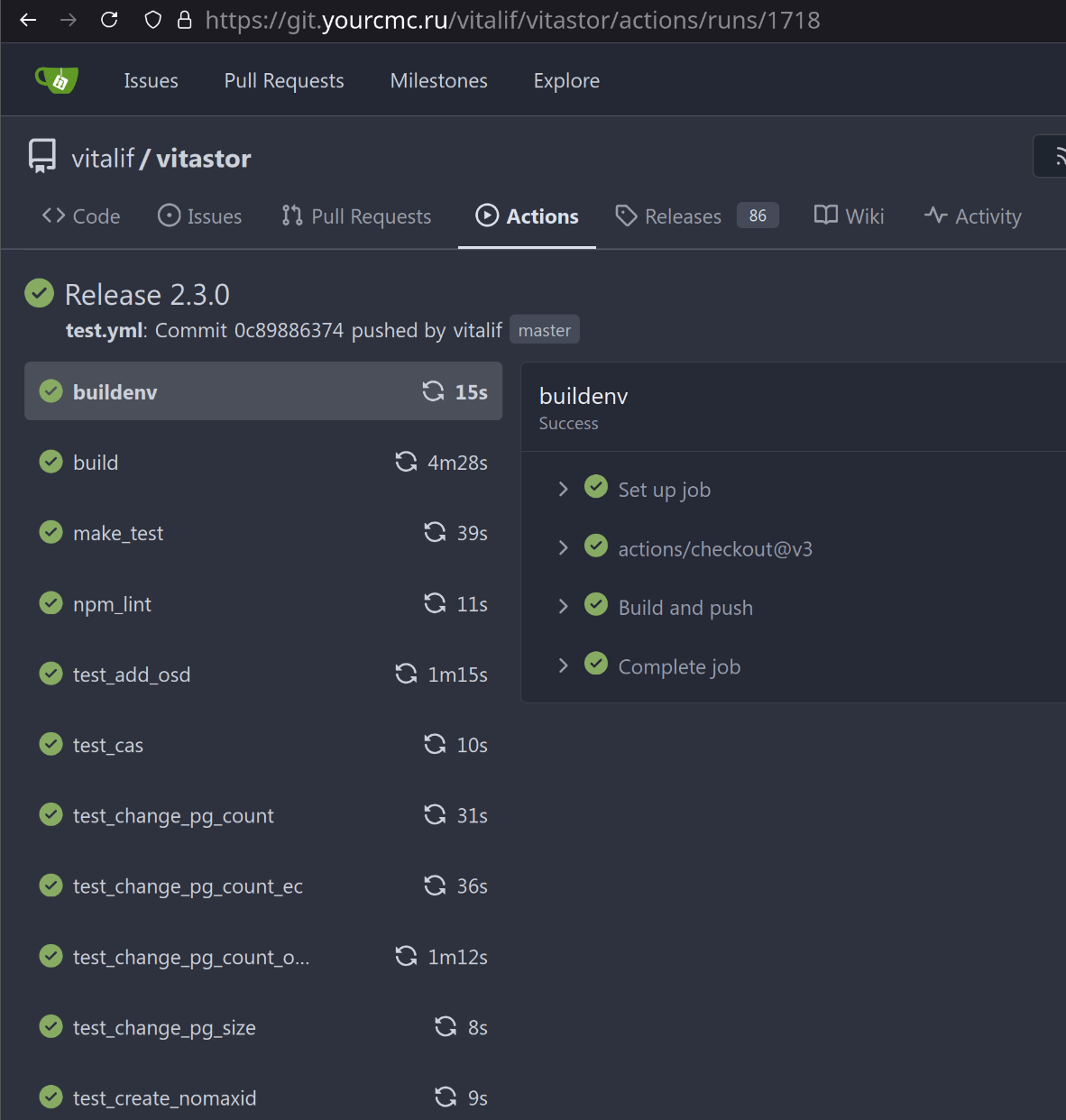

0.8.9 — Test stability and CI

1.3.0 — RDMA without ODP

1.4.0 — VDUSE in CSI

1.6.0 — ENOSPC handling

1.10.1 — Failover speedup

- Stability, CI

- Site and documentation

- A lot of new features

- Convenience

0.7.0 — NFS

1.0.0 — Checksums

1.5.0 — VitastorFS & KV 😎

1.7.0 — I/O threads, Antietcd

1.9.0 — OpenNebula

2.0.0 — S3 😎😎

2.2.0 — Local reads

0.8.0 — udev & vitastor-disk

0.8.7 — Online configuration

1.4.0 — Rebalance auto-tuning

1.6.0 — Hierarchical failure domains

1.7.0 — Prometheus

1.9.x — dd, resize, block_size auto-guess

1.10.0 — RDMA auto-configuration

What didn't change?

Block storage layer based on the American blueprints 🤣

Old storage layer at a glance

Store contract

- ID: inode:offset, 128 KB objects

- Partial read/write

- Version number++ on every write...

- Atomic write! (the network is less stable than a disk)

- 2-phase write for EC (write → commit) to fight the "RAID write hole"
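A minimal sketch of the addressing and versioning rules in the contract above, assuming a fixed 128 KB object size and assuming the client splits larger requests per object. The `ObjectId`, `locate` and `ToyStore` names are hypothetical illustrations, not Vitastor's actual code; the toy store only tracks version numbers, not data.

```python
from dataclasses import dataclass, field

OBJECT_SIZE = 128 * 1024  # 128 KB objects, as in the store contract


@dataclass(frozen=True)
class ObjectId:
    """Hypothetical object key: inode number + object-aligned offset."""
    inode: int
    offset: int  # always a multiple of OBJECT_SIZE


def locate(inode: int, byte_offset: int) -> tuple:
    """Map a byte offset inside an inode to (object id, offset within object)."""
    inner = byte_offset % OBJECT_SIZE
    return ObjectId(inode, byte_offset - inner), inner


@dataclass
class ToyStore:
    """Toy store that only tracks versions: every write does version++."""
    versions: dict = field(default_factory=dict)

    def write(self, inode: int, byte_offset: int, length: int) -> int:
        oid, inner = locate(inode, byte_offset)
        # Partial writes are allowed, but must stay inside one object
        # (assumption: the client has already split the request).
        if inner + length > OBJECT_SIZE:
            raise ValueError("write crosses an object boundary")
        self.versions[oid] = self.versions.get(oid, 0) + 1
        return self.versions[oid]
```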

Advantages

- No file system overhead

- No Copy on Write overhead

⇒ Low CPU usage

- Small metadata: 36 B per object (~290 MB / 1 TB)

- Low latency ≈ disk latency

- The journal doubles as a write buffer (SSD+HDD)
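The ~290 MB per 1 TB figure for 36-byte entries follows directly from the 128 KB object size, assuming "1 TB" means 1 TiB and exactly one 36-byte entry per object:

$$
\frac{1\ \text{TiB}}{128\ \text{KiB}} = \frac{2^{40}\ \text{B}}{2^{17}\ \text{B}} = 2^{23} \approx 8.4 \times 10^{6}\ \text{objects}
$$

$$
2^{23} \times 36\ \text{B} = 36 \times 8\ \text{MiB} = 288\ \text{MiB} \approx 302\ \text{MB}
$$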

Drawbacks

- Write Amplification for 4 KB writes: 3-5x

- 2x metadata in memory

- EC: 1 uncommitted entry → OSD hangs

- Not HDD-friendly (random writes)

- (!!!) No space for extensions

Metadata extensions

- Tombstones 🪦

- Atomic delete

- Discard

- Distributed SSD cache (tiering)

- Dynamic data blocks

- Compression

- Local SSD cache (bcache)

The First Idea

The Second Idea

Append to the end

😊 Amortized writes ⇒ 1x RAM, WA ↓↓

😢 Need Compaction (LSM?..) ⇒ WA ↑↑, CPU ↑↑

SUDDENLY

— We already have a paper-punch at home

Paper-punch at home:

Idea № 3

Instead of Compaction...

We can just track garbage
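One way to picture "tracking garbage" instead of compacting: keep a live-entry counter per metadata block; when a newer entry supersedes an older one, decrement the counter of the block holding the old entry, and reuse a block as soon as its counter drops to zero, without rewriting anything. This is a hedged sketch with hypothetical names (`MetaLog`, `append`), not the real LSMeta layout. It also shows that a single long-lived entry keeps its whole block occupied.

```python
class MetaLog:
    """Append-only metadata log that tracks garbage instead of compacting."""

    def __init__(self, entries_per_block: int):
        self.entries_per_block = entries_per_block
        self.live = {}        # block -> number of still-current entries in it
        self.where = {}       # object -> block holding its newest entry
        self.free = []        # blocks containing only garbage, ready for reuse
        self.blocks_total = 0
        self.current = None   # block currently being filled
        self.filled = 0       # entries written into the current block

    def append(self, obj) -> int:
        """Record a new entry for `obj`; return the block it was written to."""
        if self.current is None or self.filled == self.entries_per_block:
            # Open a block: prefer a fully-garbage one over growing the log.
            if self.free:
                self.current = self.free.pop()
            else:
                self.current = self.blocks_total
                self.blocks_total += 1
            self.live[self.current] = 0
            self.filled = 0
        block = self.current
        self.live[block] += 1
        self.filled += 1
        old = self.where.get(obj)
        self.where[obj] = block
        if old is not None:
            # The previous entry became garbage: just count it, no compaction.
            self.live[old] -= 1
            if self.live[old] == 0:
                del self.live[old]
                self.free.append(old)
        return block
```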

Side effect

How to avoid?

Put all entries of each object into a single block...

...But that's not LSMeta anymore, and WA ↑↑

However, the idea attracted me

NonLSM: heap-meta branch

- 65 files changed, 11489 insertions(+), 6655 deletions(-)

- Passes all tests

- cpp-btree → 🤣

- swissmap / robin_hood_map

- Atomics (on the next slides)

- Result: 176000 randwrite iops (~1.75x)

- 😢 Ugly hacks (an object is limited to 1 block)

- 😢 WA — exactly 3x or 2x

Return to LSMeta

Decided to rewrite it once more

WA 1 is worth the invalidation 😇

Buffer area instead of the journal

Hashmap and linked lists in RAM
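A minimal sketch of the in-RAM index described above: a hash map from object id to a linked list of entries, newest first, so adding a version is O(1) and the newest version is the list head. The `Entry`/`ObjectIndex` names, the `location` field and the `trim` policy (always keep the newest entry, unlink versions older than a given one, e.g. after their data leaves the buffer area) are assumptions for illustration only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Entry:
    """One metadata entry for an object version (hypothetical fields)."""
    version: int
    location: int                    # where the data lives (buffer or data area)
    older: Optional["Entry"] = None  # next, older entry of the same object


class ObjectIndex:
    """Hash map: object id -> linked list of entries, newest first."""

    def __init__(self):
        self.heads = {}

    def add(self, obj, version: int, location: int) -> None:
        # O(1): push the new entry in front of the object's list.
        self.heads[obj] = Entry(version, location, self.heads.get(obj))

    def latest(self, obj) -> Optional[Entry]:
        return self.heads.get(obj)

    def trim(self, obj, keep_from: int) -> int:
        """Unlink entries with version < keep_from (the newest is always kept).

        Returns the number of dropped entries.
        """
        node = self.heads.get(obj)
        if node is None:
            return 0
        # The list is sorted newest -> oldest: walk while the next one is still needed.
        while node.older is not None and node.older.version >= keep_from:
            node = node.older
        dropped, tail = 0, node.older
        while tail is not None:
            dropped += 1
            tail = tail.older
        node.older = None
        return dropped
```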

Side note

It's absolutely pointless if you don't optimize CPU usage!

Memory copying, (de)serialization, threads, mutexes... 👎👎👎

Write Amplification

Let's make a CoW FS?

Linux 6.11 To Introduce Block Atomic Writes – Including NVMe & SCSI Support

- New RWF_ATOMIC flag (to avoid request fragmentation)

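- New parameters in /sys/block/*/queue/ (atomic_write_max_bytes, atomic_write_unit_min_bytes, atomic_write_unit_max_bytes, atomic_write_boundary_bytes)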

Atomic writes in NVMe

Atomic Write Unit Power Fail (AWUPF): This field indicates the size of the write operation guaranteed to be written atomically to the NVM across all namespaces with any supported namespace format during a power fail or error condition.

— that's what we need!

Samsung PM9A3 😢

Micron 7450 / 7500

Kioxia (Toshiba) CD7-R / CD8-R

More atomicity

- SCSI: WRITE ATOMIC (enterprise)

- NVMe: atomic_block = (AWUPF+1) * block

- SSD: 4 KB is the translation block (sometimes more)

⇒ 4 KB writes on SSD/NVMe are always atomic!

...cool, Vitastor already relies on it 🤣
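The NVMe formula above as code: AWUPF is reported in logical blocks as a 0's based value, hence the +1. The function names are hypothetical helpers, not part of any NVMe tool.

```python
def atomic_write_unit_bytes(awupf: int, lba_size: int) -> int:
    """Power-fail atomic write size from the NVMe AWUPF field (0's based, in LBAs)."""
    return (awupf + 1) * lba_size


def fits_atomically(write_bytes: int, awupf: int, lba_size: int) -> bool:
    """True if a write of this size is within the power-fail atomic unit."""
    return write_bytes <= atomic_write_unit_bytes(awupf, lba_size)
```

With AWUPF = 0 the drive only promises a single logical block; the stronger 4 KB claim above comes from the SSD's translation block, not from this field.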

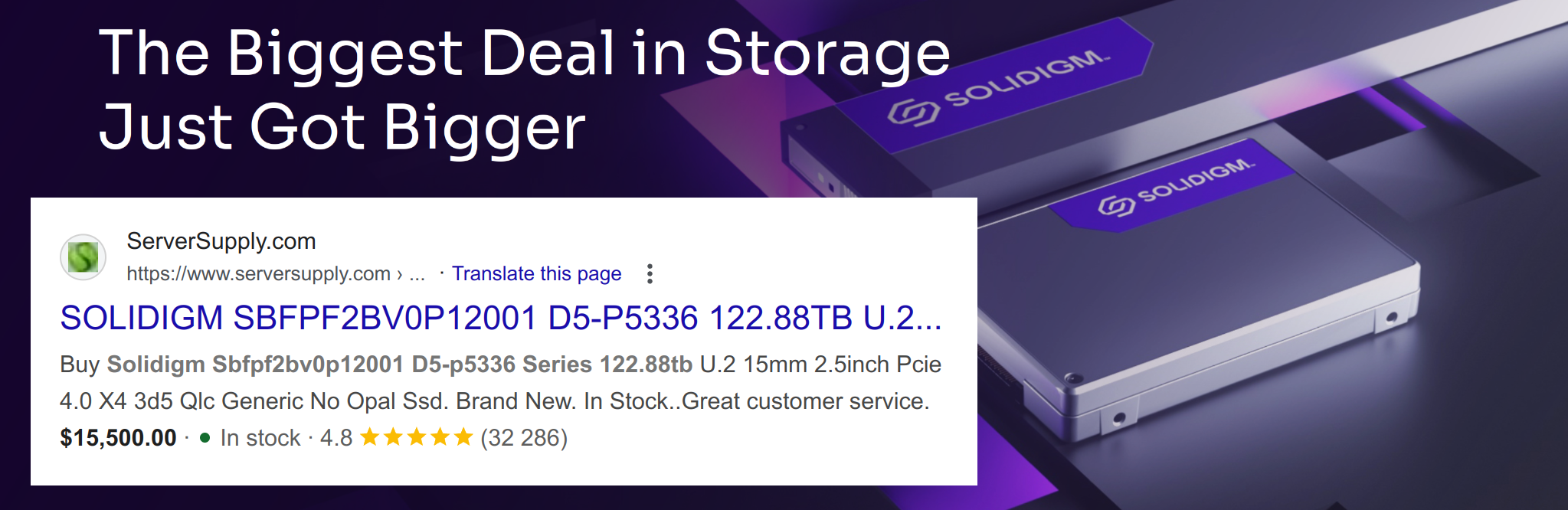

Overgrown SSDs

122 TB Solidigm D5-P5336: 16 KB block

HDD

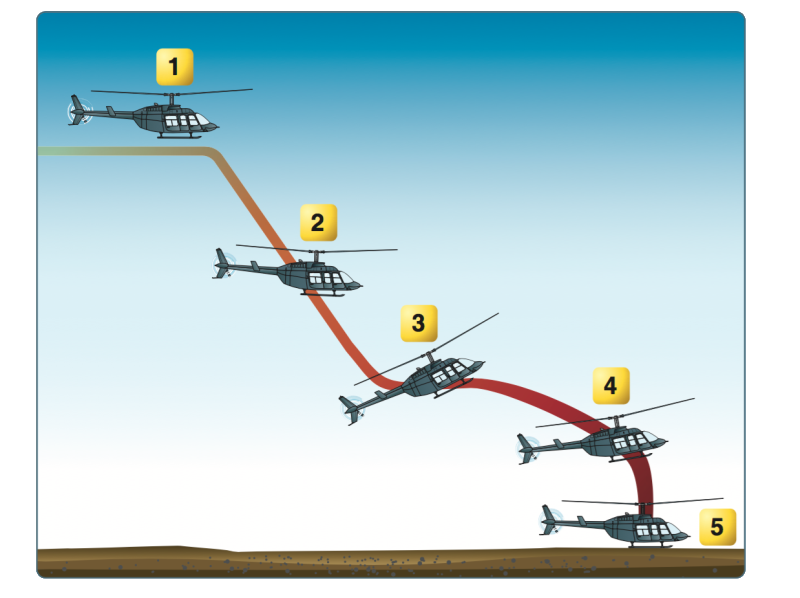

Experiments with Micron 7450

Write xxx KB blocks → pull the cable → check

- USB-NVMe: does not work (buffering?)

- PCIe: OK! But atomic_write_max_bytes = 128 KB (why?)

- max_hw_sectors_kb = 128 (why?)

- Linux kernel hardcode: IOMMU on → 128 KB, off → 256 KB

- Conclusion: IT WORKS!
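The pass/fail criterion of such a pull-the-cable test can be sketched like this, assuming each test block is stamped with its index and a generation number at the start of every 512-byte sector (a hypothetical format, not necessarily the one used in the experiment). After the power cut, a block must read back as entirely old or entirely new; any mix of generations means the write was torn.

```python
import struct

SECTOR = 512  # granularity at which a torn write could show up (assumption)


def make_block(index: int, generation: int, size: int) -> bytes:
    """Fill a test block so every sector starts with (index, generation)."""
    stamp = struct.pack("<QQ", index, generation)
    sector = stamp * (SECTOR // len(stamp))
    return sector * (size // SECTOR)


def classify(block: bytes, index: int, old_gen: int, new_gen: int) -> str:
    """Return 'old', 'new', 'torn' (mix of both) or 'corrupt'."""
    gens = set()
    for off in range(0, len(block), SECTOR):
        # Only the stamp at the beginning of each sector is checked.
        idx, gen = struct.unpack_from("<QQ", block, off)
        if idx != index or gen not in (old_gen, new_gen):
            return "corrupt"
        gens.add(gen)
    if gens == {new_gen}:
        return "new"
    if gens == {old_gen}:
        return "old"
    return "torn"  # sectors from both generations: the write was NOT atomic
```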

How to utilize it

MySQL: innodb_doublewrite=OFF

Vitastor: ???

Write Intents

- "I'm going to update the block, CRC32=..."

- Write directly to the data disk

- If we crash, check the CRC32: mismatch ⇒ old version, match ⇒ new version
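The write-intent recovery rule can be sketched as a toy model. Assumptions: a dict stands in for the data disk, the `WriteIntent` record and function names are hypothetical, and the in-place data write is atomic (as AWUPF guarantees), so after a crash the block is either fully old or fully new, never a mix.

```python
import zlib
from dataclasses import dataclass


@dataclass
class WriteIntent:
    """Hypothetical intent record, persisted before the in-place data write."""
    block: int
    crc32: int


def make_intent(block: int, data: bytes) -> WriteIntent:
    # "I'm going to update the block, CRC32=...": recorded before the data write.
    return WriteIntent(block, zlib.crc32(data))


def recover(intent: WriteIntent, disk: dict) -> str:
    """After a crash, decide which version of the block is on disk."""
    on_disk = disk.get(intent.block, b"")
    return "new" if zlib.crc32(on_disk) == intent.crc32 else "old"
```

A CRC32 collision between the old and new contents could in theory misclassify a block, so the checksum only needs to tell two specific versions apart, not authenticate data.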

LSMeta + Write Intent branch

- 36 files changed, 3229 insertions(+), 3708 deletions(-)

- WA ~1.0x!

- Micron/Kioxia, or any SSD with only 4 KB writes

- Expected 2x latency, but in practice the same latency: ~29 μs!

randwrite 4 KB results (fio_blockstore)

Memory usage

| Store | What is stored |
|---|---|
| 2.4.1 | Raw blocks + cpp-btree + journal |
| heap | Blocks with 20% reserved space + hashmap |
| lsmeta | Separate mallocs + hashmap + linked lists |

Memory usage per 1 TB*

| Store | SSD (128 KB) | SSD (256 KB) | HDD (1 MB) | vs 2.4.1 (SSD) |
|---|---|---|---|---|
| 2.4.1 (≈) | 663 MB | 371 MB | 152 MB | |
| heap | 740 MB | 412 MB | 165 MB | +11% |
| lsmeta | 785 MB | 456 MB | 154 MB | +18% |

heap: ...but in the worst case, the whole metadata area 🤣

\* Without checksums (checksums need 128 MB .. 1 GB more RAM per 1 TB)

Smart SDS with RAI(N) speed

- The release is coming 😊

- WA 1 — not a bottleneck anymore!

- The new store is optional

- Need Micron/Kioxia

- Come to the chat for a test build 😇

- Wait a bit before production 😇

Contacts

- Vitaliy Filippov

- vitastor.io

- t.me/vitastor

- vitalif@yourcmc.ru

- +7 (926) 589-84-07